
Apple’s WWDC22: what about Augmented Reality?

By Immersiv • 17th June 2022 • 5 min

Last week, Apple’s highly anticipated Worldwide Developers Conference (WWDC) ran from Monday to Friday. Apple introduced iOS 16, iPadOS 16, macOS 13 Ventura, and watchOS 9, as well as the new Apple Silicon M2 chip, successor to the powerful M1. But the big announcement everybody was expecting… never came.

So, Apple didn’t unveil its long-rumored mixed-reality headset at WWDC22, nor did it introduce RealityOS, the operating system expected to power the device. But does this mean AR was completely absent from the event? Not really!

Apple introduced ARKit 6, the latest version of its augmented reality framework, and if you read a bit between the lines, you can spot hints that Apple is preparing for the arrival of its AR/VR headset.

But let’s hear what our very own Apple expert thinks about all this.

Brian has been developing for iOS for 6 years and working on AR projects since 2020. At Immersiv, he builds our native iOS apps.

Brian Corrieri – iOS developer at Immersiv.

What were your expectations from WWDC22?

Everybody was expecting big AR announcements, and big announcements in general. In the Apple developer community, we had been convinced for some time that neither the headset nor RealityOS would be announced at WWDC22. Still, we were expecting plenty of smaller features that we could later build on for the device: announcements to help us get ready for the release of Apple’s MR headset and prepare for the journey and the user experience. There was even a New York Times article right before WWDC that raised expectations. Usually, it’s always the same few journalists writing about rumors, so when a major mainstream newspaper told us that this year’s WWDC would be the year of AR, we got very excited.

I was expecting two main features.

First, hand interactions in ARKit, letting users touch and manipulate virtual objects directly with their hands. This announcement would have been huge, and a big clue about how to develop for the headset. It would have allowed us to start working on the UI and UX of future products and prepare for the headset’s arrival.

The second announcement I was waiting for was the ability to add SwiftUI views to RealityKit (Apple’s framework for 3D rendering in AR). It might seem obvious, but it’s still not possible today! That means you can’t display an app floating around you in AR. This feature will surely have to come to RealityOS if Apple wants to merge the virtual and physical worlds.

But none of this was announced at WWDC22. We didn’t even hear the words “augmented reality” during the opening keynote (a first since ARKit was introduced in 2017!). I think Apple saw all the rumors and leaks, such as reports of the headset being demoed to Apple’s board of directors, and Tim Cook did his best to hold back as much AR-related information as possible for the big reveal. The AR/VR headset announcement is the most anticipated Apple event since the iPhone was unveiled in 2007. Apple can’t afford to miss, and they have to hit hard! If we had gotten all these pieces of information at WWDC, some of the wow effect would have been lost at the reveal. So I think that’s why we didn’t get any big AR-related announcements with iOS 16. It’s all calculated.

Tim Cook greets developers at WWDC22 – courtesy of Apple

OK, we didn’t get a headset or RealityOS. But was AR truly absent from the event?

This is where it gets really interesting: even though AR was missing from the keynote, it showed up in several of the week’s online sessions.

First of all, ARKit moves from version 5 to version 6. Concretely, there aren’t many new features, but a few nice improvements are worth noting: capturing AR experiences in 4K, HDR support, and better motion capture. The great news for us at Immersiv is that we now have access to HDR and camera exposure settings. Sure, it’s not a revolution, but it’s very useful for fine-tuning our AR experiences.

There was also the announcement of RoomPlan, a new Swift API that lets you scan a room (up to 9 m by 9 m). The API understands the room’s dimensions and the objects in it, and generates a 3D model that can be exported in USDZ format. At first, this may seem like an API for real estate agents and architects. But why would Apple build an API for such a specific market? That is surprising coming from Apple… Perhaps it is a glimpse of what the headset will allow: precise scanning of its surroundings. And from there, a whole field of possibilities opens up.

Apple RoomPlan – courtesy of Apple

So that’s it for the direct AR announcements at WWDC. To see more AR, we have to read a bit between the lines.

Two or three interesting things stand out. One is the ability to use the ultra-wideband chip (the same one found in the latest iPhones and AirTag) to locate objects very precisely in the environment. Technically, the Nearby Interaction API has been available for quite some time, but Apple is now bringing it to ARKit. This makes it easy to guide the user to an object in space with augmented reality, a bit like the AirTag experience.

This improvement will undoubtedly be useful when the headset hits the market. One use case that immediately comes to mind at Immersiv is guiding fans through stadiums.

There was also news about location anchors. Anchoring geolocated AR objects has been possible in select US cities for 2 years. Last year it came to London, and this year Apple added new cities in Australia, Japan, and Singapore. Apple also announced that it would come to Paris by the end of the year! That’s great news for us! It’s a real sign that Apple is taking AR outdoors. It enables so many possibilities, and I can’t wait to try it out.

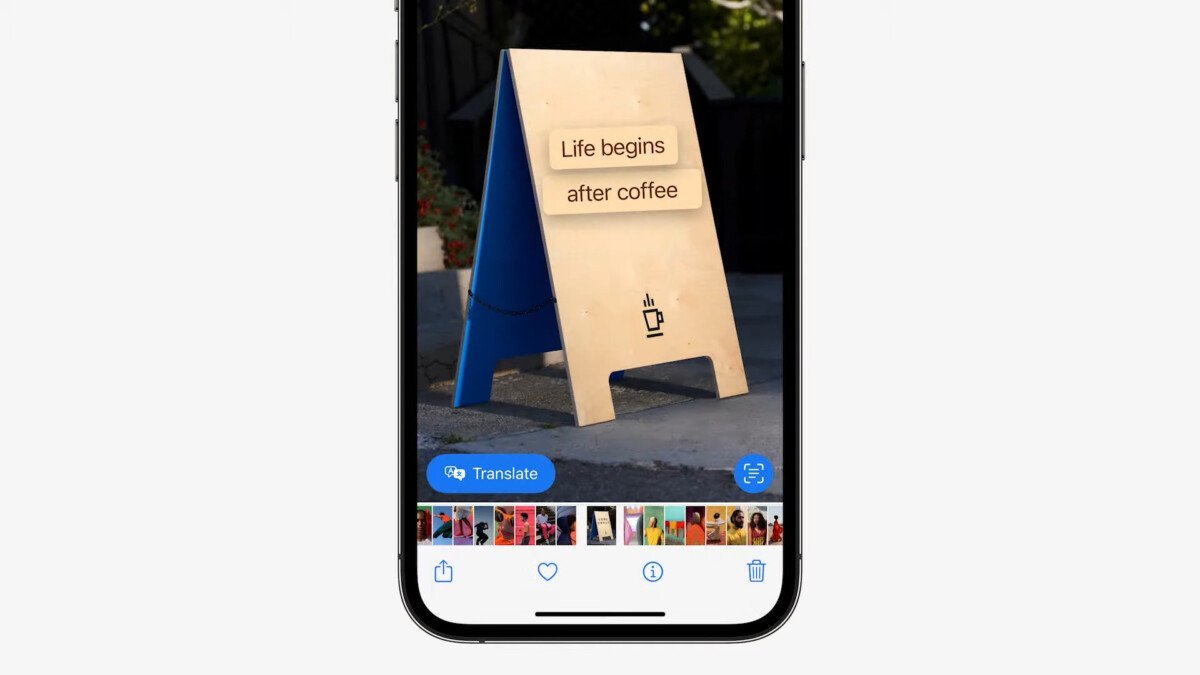

Live Text also got some improvements. It arrived last year, and this year it goes even further, detecting text in video content and offering live translation, currency conversion, and more. The Live Text API is also now available to developers.

Apple Live Text translation API – courtesy of Apple

Overall, there were big advances in detection and machine learning. You can now cut out the background of an image on your Apple device with a single gesture, and it works on images across the system, whether in Safari or in your photo library… This kind of advanced machine learning technology will be very useful for the AR/VR headset. We can imagine Apple’s headset doing this kind of thing on the fly and offering some very, very interesting experiences.

Also in the field of image detection and analysis, Visual Lookup can now identify birds, insects, and statues; until now, it was limited to dogs and cats, art, and landmarks. With a headset, you could imagine instant access to this kind of information, displayed live in AR.

But, as last year, we are still hungry for more.

What do you think are the main challenges Apple faces today with its AR headset? Why is it taking this long?

Well, partly, there’s everything I explained earlier about the leaks, the rumors, and the pressure on Apple surrounding such a big announcement.

But we can’t ignore the significant technical challenges they face. According to the rumors, the headset will be a fully standalone device, extremely powerful, with very high-quality displays: we are hearing about an M1 chip and an 8K micro-LED display for each eye. Such a device would require a lot of power, and we can already see with smaller, less ambitious devices that overheating is a big issue. Apple isn’t a magician; they face the same problems as everyone else. But with such high ambitions for performance and user experience, the bar is set very high. And with all eyes on them, the product that hits the market has to be flawless.

What was the most exciting news for you as an AR dev? And for you personally?

As an AR developer, having control over HDR and exposure settings was the highlight. But personally, it’s the new lock screen that gave me chills!

Tim Cook presenting the new iOS 16 lock screen features – courtesy of Apple

What are your predictions and expectations for Apple’s AR headset?

It’s hard to give a precise date. There are so many things to take into consideration! Of course, we’re hoping for sooner rather than later. But we also can’t forget the AR glasses Apple has been working on. With these two huge projects in the pipeline, we’re expecting some very exciting news!